
Introduction to TensorFlow Control Practice:

In the recent blog series on TensorFlow, we have learned about the following concepts:

- Constants, variables and placeholders in TensorFlow

- Optimizers and loss functions

- Graphs, sessions and TensorBoard

If you have any difficulty with graphs, sessions, constants, optimizers or control flow in TensorFlow, please refer to the tutorials below from this series:

Tensorflow tutorial from basics for beginners- Part1 | AI Sangam

Optimize Parameters Tensorflow Tutorial –Part2 |AI Sangam

Low Level Introduction of Tensorflow in a simple way-Part 3| AI Sangam

This tutorial covers TensorFlow Control Practice, i.e. working code for each control operation along with its session and graph. Please look at the list below to see what this tutorial focuses on.

- Code with explanation for Dependencies

- Code with explanation for Conditional Statements

- Code with explanation for Loops

1. Code with explanation for Dependencies

The theory behind dependencies, along with their different types, was discussed in the previous part. If you want to read about it in detail, please don't miss the previous blog in this TensorFlow series. Here, we will write working code for each type of dependency and visualize each one in TensorBoard to learn more about its edges and nodes.

a.) tf.control_dependencies: Please look at the code below. Since this is the practical section, there will be code and graphs as promised above.

```python
import tensorflow as tf  # TensorFlow 1.x API

# Variables and placeholders are defined here
x = tf.placeholder(tf.int32, shape=[], name='x')
y = tf.Variable(2, dtype=tf.int32)

# The assign op doubles y each time it runs
assign_op = tf.assign(y, y * 2)

# The multiplication is created inside a control dependency,
# so assign_op is executed before output is computed
with tf.control_dependencies([assign_op]):
    output = x * y

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    writer = tf.summary.FileWriter('session_tensorflow_1', sess.graph)
    for i in range(3):
        print('output:', sess.run(output, feed_dict={x: 1}))
    writer.close()
```

Explanation of the code above

- x is a placeholder into which different values can be fed. Here shape=[] means x must be a scalar; if the shape is left undefined (shape=None), a tensor of any shape can be fed. 'x' is the name given to the operation.

- y is a variable whose value will change during the session. Before it can be used, it has to be initialized with tf.global_variables_initializer(), which is run inside the session.

- Next, assign_op assigns y the value y*2. It is passed as the argument to tf.control_dependencies, so it is executed before output = x*y is computed. We discussed this in our previous blog, so please read about it there.

- x is fed the value 1 in each of the 3 loop iterations. Each time, y is first doubled by assign_op, as required by the tf.control_dependencies block, and then output is computed. The output is shown below:

output: 4

output: 8

output: 16
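To make the order of execution concrete, here is a minimal plain-Python sketch (no TensorFlow needed) of what the graph above computes on each sess.run call, assuming the assign op always finishes before the multiplication reads y. The function name simulate_control_dependency is hypothetical and only mirrors the graph's logic.

```python
def simulate_control_dependency(x_value, y_initial, runs):
    """Mimic sess.run(output) where assign_op (y = y * 2) runs before output = x * y."""
    y = y_initial
    outputs = []
    for _ in range(runs):
        y = y * 2                    # assign_op executes first (control dependency)
        outputs.append(x_value * y)  # then output = x * y reads the updated y
    return outputs


print(simulate_control_dependency(x_value=1, y_initial=2, runs=3))  # [4, 8, 16]
```

Because y is a variable, its doubled value persists between runs, which is why the output keeps doubling instead of staying at 4.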

Let us see the result in TensorBoard. Run the command below to load the event file into TensorBoard:

```shell
tensorboard --logdir=session_tensorflow_1  # the folder where the event file is stored
```

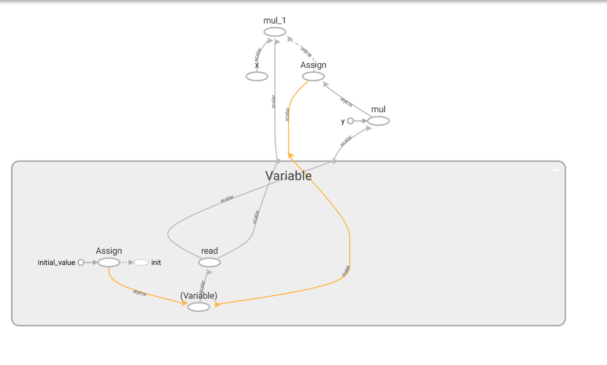

Let us also look at the graph, which gives a better understanding of the edges (tensors, which are scalars here) and nodes (operations).

b.) tf.group: When you need to run multiple operations together, you can use tf.group. Please see the code below.

```python
import tensorflow as tf  # TensorFlow 1.x API

sess = tf.InteractiveSession()

a = tf.Variable(1.0)
b = tf.Variable(10.0)
c = tf.Variable(0.0)

op1 = tf.assign(c, a * 2)

with tf.get_default_graph().control_dependencies([op1]):
    # op2 will execute only after op1:
    # first the value of c becomes 2 after op1,
    # then op2 assigns c a new value, c * 5
    op2 = tf.assign(c, c * 5)

grp = tf.group(op1, op2)  # runs multiple operations together

# Write the graph and initialize the variables once, before the loop
writer = tf.summary.FileWriter('session_tensorflow_3', sess.graph)
sess.run(tf.global_variables_initializer())

for i in range(3):
    sess.run(grp)
    print(sess.run(c))

writer.close()
sess.close()
```

Explanation of this code

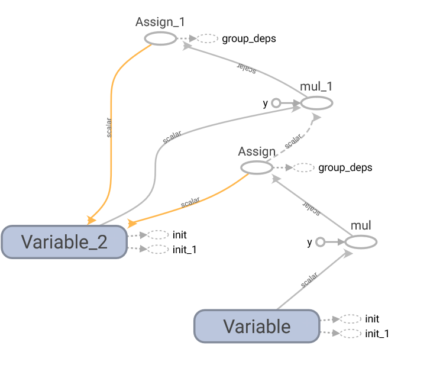

First, op1 is executed, so the value of c becomes a*2, i.e. 2. op2 is created inside the control_dependencies block (a with block, not a loop), whose argument is op1, so op2 runs only after op1. op2 then assigns c the value c*5, i.e. 10. Both operations are combined with tf.group, so running grp runs both of them. Please see the graph for this code.
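The sequence of assignments can be traced with a small plain-Python sketch, assuming op2 reads c only after op1 has written it (which is what the control dependency is meant to guarantee). simulate_group is a hypothetical helper, not part of TensorFlow.

```python
def simulate_group(a, c_initial, runs):
    """Mimic sess.run(grp) followed by sess.run(c), where grp = tf.group(op1, op2)."""
    c = c_initial
    values = []
    for _ in range(runs):
        c = a * 2  # op1: c = a * 2
        c = c * 5  # op2: runs only after op1, so it sees c == a * 2
        values.append(c)
    return values


print(simulate_group(a=1.0, c_initial=0.0, runs=3))  # [10.0, 10.0, 10.0]
```

Since op1 overwrites c with a*2 on every run, c does not keep growing in this sketch: each run of the group ends at the same value.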

c.) tf.tuple: Its definition was covered in the previous blog. Let us move to the code, with some explanation and live graphs:

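```python
import tensorflow as tf  # TensorFlow 1.x API

x = tf.placeholder(tf.int32, shape=[3], name='x')
y = tf.placeholder(tf.int32, shape=[3], name='y')

c = tf.math.multiply(x, y, name='c')  # element-wise x * y
d = tf.math.multiply(c, y, name='d')  # element-wise c * y

# tf.tuple returns [c, d]; each value is available only after both are computed
e = tf.tuple([c, d])

with tf.Session() as sess:
    writer = tf.summary.FileWriter('session_tensorflow_4', sess.graph)
    # The placeholders are int32, so integer values are fed
    print(sess.run(e, feed_dict={x: [1, 2, 3], y: [1, 2, 3]}))
    writer.close()
```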

Explanation of this code

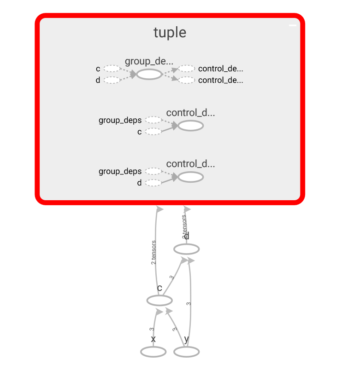

tf.tuple returns a list of tensors with the same values as its inputs, except that each value is only returned after all of the input tensors have been computed. Here c is the first tensor and d is the second. x and y are both placeholders, so their values are passed in a feed dictionary. Please see the output and graph below:

[array([1, 4, 9]), array([ 1, 8, 27])]
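The arithmetic behind this output can be checked with a plain-Python sketch that replaces the tensors with lists; simulate_tuple is a hypothetical name used only for illustration.

```python
def simulate_tuple(x, y):
    """Mimic e = tf.tuple([c, d]) with c = x * y and d = c * y (element-wise)."""
    c = [xi * yi for xi, yi in zip(x, y)]
    d = [ci * yi for ci, yi in zip(c, y)]
    # tf.tuple hands back both values together, once both are computed
    return [c, d]


print(simulate_tuple([1, 2, 3], [1, 2, 3]))  # [[1, 4, 9], [1, 8, 27]]
```

Since d = c * y = x * y * y, with x = y = [1, 2, 3] the second tensor holds the cubes 1, 8 and 27.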

2. Code with explanation for Conditional Statements

Let us move on to conditional statements. Without wasting any time, let us build the code for tf.cond together.

a.) tf.cond: The example for tf.cond is taken from Stack Overflow, and credit for the code discussed here goes to the original answer there.

Here is the code:

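```python
import tensorflow as tf  # TensorFlow 1.x API

pred = tf.constant(True)
x = tf.Variable([1])

# This assign op is created OUTSIDE the branch functions of tf.cond
assign_x_2 = tf.assign(x, [2])

def update_x_2():
    with tf.control_dependencies([assign_x_2]):
        return tf.identity(x)

y = tf.cond(pred, update_x_2, lambda: tf.identity(x))

with tf.Session() as sess:
    writer = tf.summary.FileWriter('session_tensorflow_5', sess.graph)
    # tf.global_variables_initializer() replaces the deprecated tf.initialize_all_variables()
    sess.run(tf.global_variables_initializer())
    print(y.eval())
    writer.close()
```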

Explanation of the code

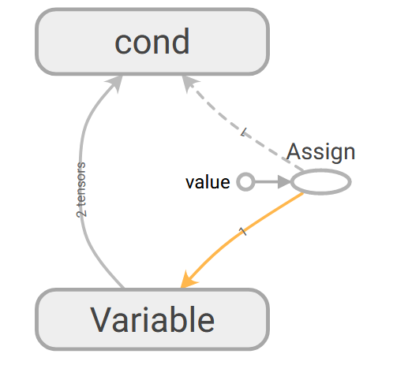

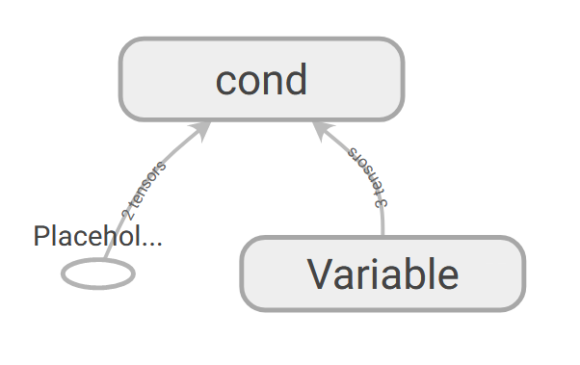

If you set pred = False, the output does not change. Understanding why is the key to this code. assign_x_2 is created outside both functions passed to tf.cond, update_x_2 (the true function) and lambda: tf.identity(x) (the false function). Any tensor or operation created outside the true and false functions is executed regardless of which branch tf.cond selects, so both branches read x after it has been assigned 2. Hence it makes no difference whether pred is True or False: the output stays the same. To understand this in more detail, please see the graph created by running this code.

How to resolve the problem?

If we read the documentation carefully, the solution is to create the assign op, tf.assign(x, [2]), inside the control_dependencies block of update_x_2 instead of outside it. You can find a detailed answer in the Stack Overflow thread credited above. This way x is updated only in update_x_2, while lambda: tf.identity(x) returns the value of x unchanged, so the true function and the false function of tf.cond now give different results. Please see the code below to understand more.

```python
import tensorflow as tf  # TensorFlow 1.x API

pred = tf.placeholder(tf.bool, shape=[])
x = tf.Variable([1])

def update_x_2():
    # The assign op is now created INSIDE the true function
    with tf.control_dependencies([tf.assign(x, [2])]):
        return tf.identity(x)

y = tf.cond(pred, update_x_2, lambda: tf.identity(x))

with tf.Session() as session:
    writer = tf.summary.FileWriter('session_tensorflow_6', session.graph)
    session.run(tf.global_variables_initializer())
    print(y.eval(feed_dict={pred: False}))
    print(y.eval(feed_dict={pred: True}))
    writer.close()
```

Output of the code

[1]

[2]

Graph for the above:

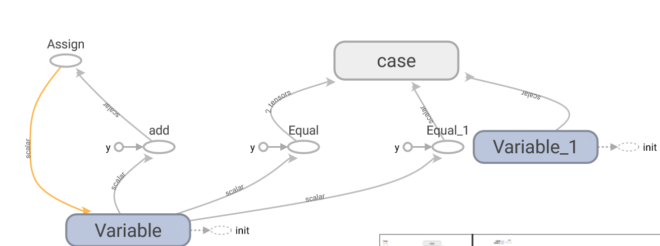
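The difference between the two tf.cond examples can be modelled in plain Python. This sketch assumes only the documented tf.cond rule that operations created outside true_fn and false_fn run whichever branch is taken; simulate_cond and its assign_inside_true_fn flag are hypothetical names, not TensorFlow API.

```python
def simulate_cond(pred_values, assign_inside_true_fn):
    """Mimic evaluating y = tf.cond(pred, update_x_2, lambda: tf.identity(x)) once per pred."""
    x = [1]  # value of the variable x after initialization
    results = []
    for pred in pred_values:
        if not assign_inside_true_fn:
            # First example: assign_x_2 is created outside both branches,
            # so it is executed whichever branch tf.cond selects.
            x = [2]
        if pred and assign_inside_true_fn:
            # Fixed example: the assign lives inside update_x_2,
            # so it only runs when the true branch is selected.
            x = [2]
        results.append(list(x))  # both branches end by reading x (tf.identity)
    return results


print(simulate_cond([False, True], assign_inside_true_fn=False))  # [[2], [2]]
print(simulate_cond([False, True], assign_inside_true_fn=True))   # [[1], [2]]
```

Note that x is a variable, so once the true branch has assigned 2, a later evaluation with pred = False also reads 2.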

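b.) tf.case: This is another type of conditional control. We subject a variable to different conditions, append each (condition, function) pair to a list and pass that list to tf.case, which returns the result of the function for the first condition that is true, or the result of the default function if none is. Please see the code below with explanation and graph.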

```python
import tensorflow as tf  # TensorFlow 1.x API

a = tf.Variable(0, dtype=tf.int64)
b = tf.assign(a, a + 1)
c = tf.Variable(0.1, dtype=tf.float32)

case = []
for d, e in [(2, 0.01), (4, 0.001)]:
    pred = tf.equal(a, d)
    fn_tensor = tf.constant(e, dtype=tf.float32)
    # Bind the current fn_tensor as a default argument; a plain
    # `lambda: fn_tensor` would return the LAST constant (0.001) for every pair
    case.append((pred, lambda t=fn_tensor: t))

case_selection = tf.case(case, default=lambda: c)

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    writer = tf.summary.FileWriter('session_tensorflow_7', sess.graph)
    for _ in range(6):
        print(sess.run([a, case_selection]))
        sess.run(b)
    writer.close()
```

The output of this code is shown below:

[0, 0.1]

[1, 0.1]

[2, 0.01]

[3, 0.1]

[4, 0.001]

[5, 0.1]

Explanation of the code:

- a is a variable whose initial value is 0.

- b assigns a the value a+1, i.e. it increments a each time it is run.

- c is another variable; its value is returned by the default function of tf.case when none of the conditions is true.

- case is a list, initialized as empty, that collects the (condition, function) pairs.

- Each condition is pred = tf.equal(a, d). When it is true, tf.case returns the value e for that pair; otherwise it falls back to c.
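A Python detail matters when the case list is built in a loop: a lambda such as lambda: fn_tensor looks up fn_tensor when tf.case calls it, which happens after the loop has finished, not when the lambda is created. Every pair would then return the last constant (0.001). Binding the value as a default argument (lambda t=fn_tensor: t) avoids this. The plain-Python sketch below reproduces both behaviours with ordinary numbers instead of tensors. The helper names are hypothetical, and select_case only approximates tf.case's rule of returning the first pair whose condition is true.

```python
def build_cases_late_binding(pairs):
    """Build (d, fn) pairs with `lambda: value`, as in a loop over fn_tensor."""
    cases = []
    for d, e in pairs:
        value = e  # plays the role of fn_tensor
        cases.append((d, lambda: value))  # captures the variable, not its current value
    return cases


def build_cases_bound(pairs):
    """Bind the current value as a default argument instead."""
    cases = []
    for d, e in pairs:
        value = e
        cases.append((d, lambda v=value: v))  # v is fixed when the lambda is created
    return cases


def select_case(cases, a, default):
    """Return fn() of the first pair whose condition (a == d) holds, else default."""
    for d, fn in cases:
        if a == d:
            return fn()
    return default


pairs = [(2, 0.01), (4, 0.001)]
late = build_cases_late_binding(pairs)
bound = build_cases_bound(pairs)
print([select_case(late, a, 0.1) for a in range(6)])   # [0.1, 0.1, 0.001, 0.1, 0.001, 0.1]
print([select_case(bound, a, 0.1) for a in range(6)])  # [0.1, 0.1, 0.01, 0.1, 0.001, 0.1]
```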

Graph for this is below:

3. Code with explanation for Loops

a.) tf.while_loop: Now let us move on to loop control and build the code for tf.while_loop, which executes a loop in TensorFlow. We hope you have followed our previous article on how all these operations work. If not, please read the previous blog using the link below:

Low Level introduction of Tensorflow in a simple way – Part 3| AI Sangam

Please look at the code below to understand tf.while_loop better:

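```python
import tensorflow as tf  # TensorFlow 1.x API

i = tf.constant(4)
c = lambda i: tf.less(i, 9)  # condition: keep looping while i < 9
b = lambda i: tf.add(i, 2)   # body: increase i by 2 in each iteration
r = tf.while_loop(c, b, [i])

with tf.Session() as sess:
    writer = tf.summary.FileWriter('session_tensorflow_8', sess.graph)
    print(sess.run(r))
    writer.close()
```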

Explanation of the code:

- There are three parts in the code: the condition c, the body b, which runs as long as the condition is true, and the loop variable i, whose value is modified in each iteration and returned as the output.

- i starts at 4 and is increased by 2 while i < 9, i.e. 4 → 6 → 8 → 10. The loop ends when i becomes 10, because 10 < 9 is false.

Output of the code:

10
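The same loop can be traced step by step with a plain-Python sketch; simulate_while_loop is a hypothetical helper that mirrors the condition c and body b above, not a TensorFlow function.

```python
def simulate_while_loop(i, limit, step):
    """Mimic tf.while_loop(cond, body, [i]) with cond: i < limit and body: i + step."""
    trace = [i]
    while i < limit:  # condition c
        i = i + step  # body b
        trace.append(i)
    return i, trace


result, trace = simulate_while_loop(4, 9, 2)
print(trace)   # [4, 6, 8, 10]
print(result)  # 10
```

If the condition is false from the start, the body never runs and the initial value is returned unchanged.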

Graph:

Conclusion:

Let us recap what we have learned in this blog about TensorFlow Control Practice. We continued from the last blog and carried out a practical session for the different control operations available in TensorFlow. Each control is described with working code, output and live graphs, and each piece of code is explained point by point. We hope this creates a deeper understanding of TensorFlow. We will keep working on TensorFlow and bring out more simple and understandable blogs on it. You can follow us on the social media channels listed in the footer of the blog. You can also drop an email at aisangamofficial@gmail.com, or visit the company's official website www.aisangam.com to learn about our services and features. If you have any questions related to this blog, please mention them in the comment section so that we can assist you better.
